Drone-based Object Counting by Spatially Regularized Regional Proposal Networks

Meng-Ru Hsieh, Yen-Liang Lin, Winston Hsu

Abstract

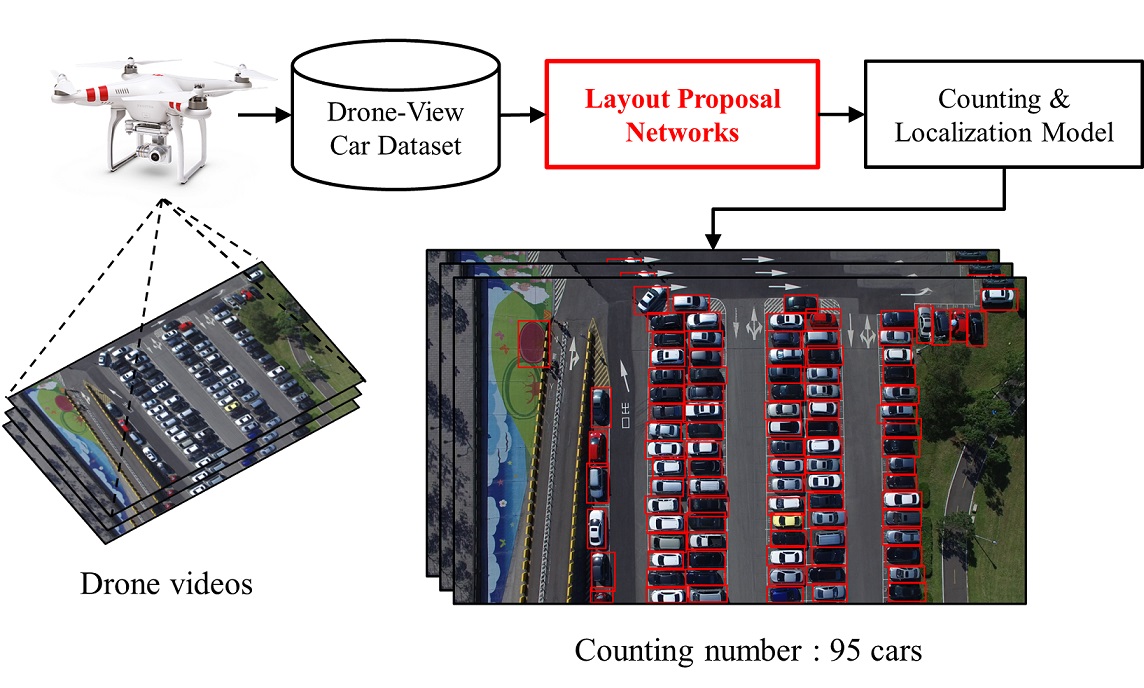

Existing counting methods often adopt regression-based approaches and cannot precisely localize the target objects, which hinders further analysis (e.g., high-level understanding and fine-grained classification). In addition, most prior work focuses on counting objects in static environments with fixed cameras. Motivated by the advent of unmanned flying vehicles (i.e., drones), we are interested in detecting and counting objects in such dynamic environments. We propose Layout Proposal Networks (LPNs) and spatial kernels to simultaneously count and localize target objects (e.g., cars) in videos recorded by a drone. Unlike conventional region proposal methods, we leverage spatial layout information (e.g., cars are often parked in regular patterns) and introduce these spatially regularized constraints into our network to improve localization accuracy. To evaluate our counting method, we present a new large-scale car parking lot dataset (CARPK) that contains nearly 90,000 cars captured from different parking lots. To the best of our knowledge, it is the first and largest drone-view dataset that supports object counting and provides bounding box annotations.

Publication

Meng-Ru Hsieh, Yen-Liang Lin, Winston H. Hsu. Drone-based Object Counting by Spatially Regularized Regional Proposal Networks, ICCV 2017 [arXiv pdf] [bibtex]

@inproceedings{Hsieh_2017_ICCV,

Author = {Meng-Ru Hsieh and Yen-Liang Lin and Winston H. Hsu},

Booktitle = {The IEEE International Conference on Computer Vision (ICCV)},

Title = {Drone-based Object Counting by Spatially Regularized Regional Proposal Networks},

Year = {2017},

organization={IEEE}

}

Dataset

Please note that this dataset is made available for academic research purposes only. Some images are collected from the PUCPR subset of the PKLot dataset, and the copyright belongs to the original owners. If any of the images belong to you and you would like them removed, please inform us and we will remove them from our dataset immediately.

Car Parking Lot Dataset (CARPK)

The Car Parking Lot Dataset (CARPK) contains nearly 90,000 cars from 4 different parking lots, collected by a drone (PHANTOM 3 PROFESSIONAL). The images were taken from the drone view at a height of approximately 40 meters. In addition, we completed the annotations for all cars in each image of a subset of the PUCPR dataset; the resulting PUCPR+ dataset contains nearly 17,000 cars in total. Together they form a large-scale dataset focused on car counting across diverse parking lot scenes. Each car is annotated with a bounding box, recorded as its top-left and bottom-right points. The bounding box annotations support object counting, object localization, and further investigation. The downloaded dataset has the following structure:

- Car Parking Lot Dataset (CARPK) - contains information on 89,777 cars

  - images - drone-view images (*.png) named after the parking lot they belong to

  - annotations - text files (*.txt) with one car label per line; each file name corresponds to its image file

- Pontifical Catholic University of Parana+ Dataset (PUCPR+) - contains information on 16,456 cars

  - images - 10th-floor-view images (*.jpg) of a parking lot, taken from a building in PUCPR

  - annotations - text files (*.txt) with one car label per line; each file name corresponds to its image file

- Additional Tools

  - Bounding Box Drawing Tool - script for visualizing bounding boxes

  - Counting Evaluation Tool - script for computing MAE and RMSE

- All files are password-protected. Please read and fill in the online EULA form before downloading the database. Once we have received the EULA form, we will e-mail you the password for the files.

- Download the CARPK dataset, PUCPR+ dataset, and all related materials from [here] (2.0G)

- If you have any problems downloading the data, do not hesitate to contact us at my e-mail address.
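As a rough illustration of how the annotation files could be consumed, the sketch below parses one file into bounding boxes and counts the cars. The exact field layout is an assumption, not something stated on this page: each non-empty line is taken to start with four whitespace-separated integers `x1 y1 x2 y2` (top-left, then bottom-right), and any trailing fields are ignored. The names `Box`, `parse_annotations`, and `count_cars` are hypothetical helpers and are not part of the released tools.

```python
from pathlib import Path
from typing import List, NamedTuple


class Box(NamedTuple):
    """Axis-aligned box given by its top-left and bottom-right corners."""
    x1: int
    y1: int
    x2: int
    y2: int


def parse_annotations(text: str) -> List[Box]:
    """Parse the contents of one annotation file, one car per line.

    Assumed line layout: "x1 y1 x2 y2 [extra fields...]".
    """
    boxes = []
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 4:
            continue  # skip blank or malformed lines
        x1, y1, x2, y2 = (int(v) for v in fields[:4])
        boxes.append(Box(x1, y1, x2, y2))
    return boxes


def count_cars(annotation_path: Path) -> int:
    """Ground-truth count for one image = number of annotated boxes."""
    return len(parse_annotations(annotation_path.read_text()))


# Made-up sample content, only to show the call pattern.
sample = "10 20 60 110 1\n\n200 35 250 130 1\n"
boxes = parse_annotations(sample)
print(len(boxes), boxes[0])
```

Because every car has its own line, the ground-truth count for an image is simply the number of parsed boxes, which is the quantity a counting evaluation would compare against.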

Notes

- Cars located on the edge of the image are included as long as the marked region is recognizable and the instance is clearly a car.

- The images of the PUCPR+ dataset were taken from a high floor of a building, as in the original PKLot dataset. Hence, their view differs slightly from that of the drone-view images.

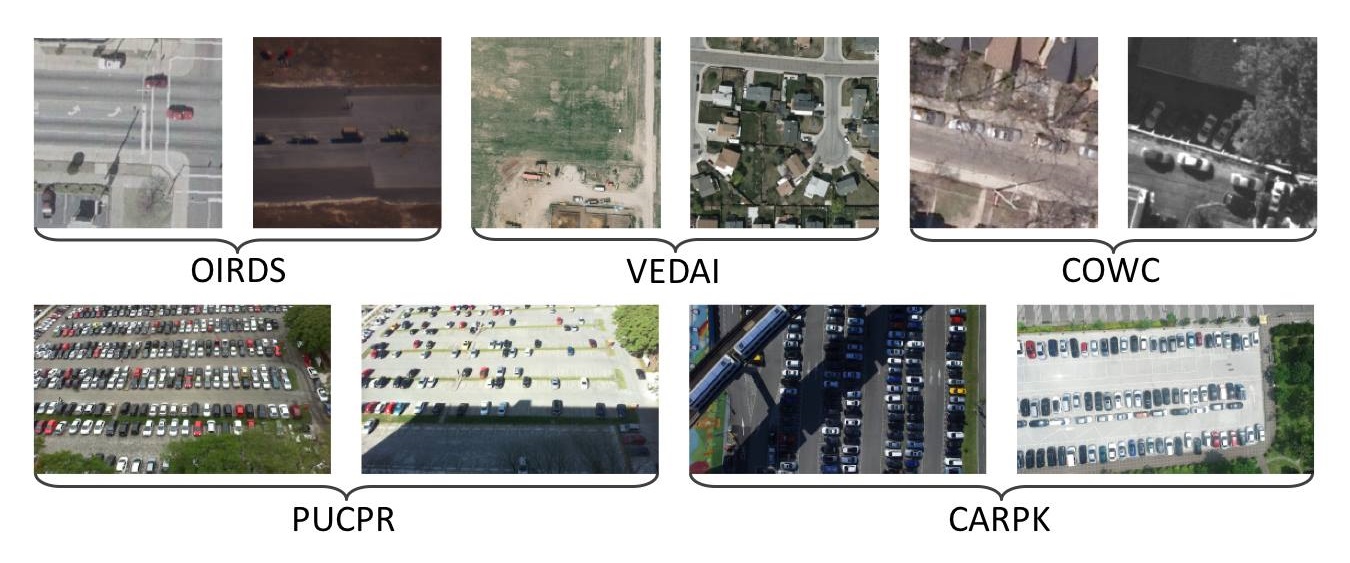

Comparison of aerial view car-related datasets

In contrast to the PUCPR dataset, our dataset supports the counting task with bounding box annotations for all cars in a single scene. Most importantly, compared to other car datasets, CARPK is the only dataset captured in drone-based scenes, and it is large enough to provide sufficient training samples for deep learning models.

| Dataset | Sensor | Multi Scenes | Resolution | Annotation Format | Car Numbers | Counting Support |
| --- | --- | --- | --- | --- | --- | --- |
| OIRDS | satellite | ✓ | low | bounding box | 180 | ✓ |
| VEDAI | satellite | ✓ | low | bounding box | 2,950 | ✓ |
| COWC | aerial | ✓ | low | car center point | 32,716 | ✓ |
| PUCPR | camera | ✕ | high | bounding box | 192,216 | ✕ |
| CARPK | drone | ✓ | high | bounding box | 89,777 | ✓ |

Experimental Results

Car proposal performance on PUCPR+ and CARPK

Here we report the Average Recall (AR), which simultaneously evaluates recall and localization accuracy, for the region proposal methods. Note that the revised Region Proposal Network (RPN + small) is trained with more appropriate default box sizes. The results show that our proposed Layout Proposal Network (LPN) with regularized constraints outperforms both the RPN and the revised RPN on the two datasets (e.g., relative to RPN + small on PUCPR+, it is 14.1% better at 300 proposals and 8.42% better at 500 proposals). This indicates that prior layout knowledge can benefit the outcome by searching for instances in images in a spatially regular manner.

| Dataset | PUCPR+ | | | | | CARPK | | | | |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Methods | AR@100 | AR@300 | AR@500 | AR@700 | AR@1000 | AR@100 | AR@300 | AR@500 | AR@700 | AR@1000 |
| Region Proposal Network | 3.2% | 9.1% | 13.9% | 17.4% | 21.2% | 11.4% | 27.9% | 34.3% | 37.4% | 39.2% |
| Region Proposal Network + small | 20.5% | 43.2% | 53.4% | 57.3% | 59.9% | 31.1% | 46.5% | 50.0% | 51.8% | 53.4% |
| Layout Proposal Network (ours) | 23.1% | 49.3% | 57.9% | 60.7% | 62.5% | 34.7% | 51.2% | 54.5% | 56.1% | 57.5% |

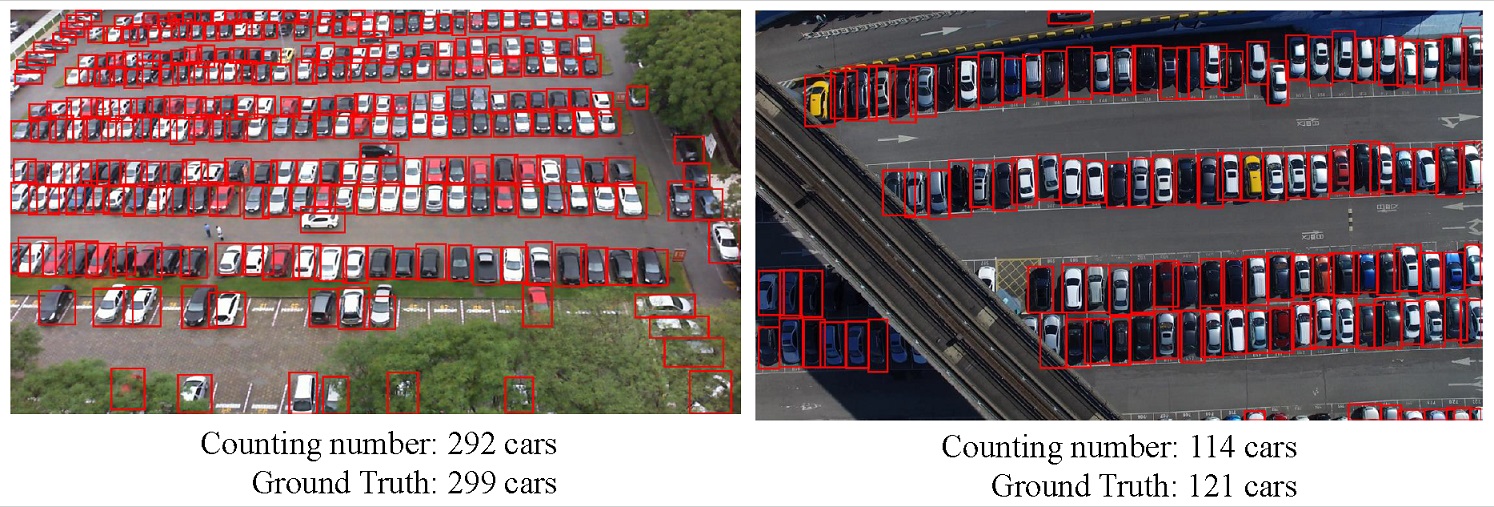
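To make the AR@N metric more concrete, here is a minimal sketch of one common way to compute it for a single image. It rests on several assumptions that this page does not confirm: proposals are sorted by descending score, only the top N are kept, a ground-truth box counts as recalled at a threshold if its best IoU with any kept proposal reaches that threshold, and recall is averaged over IoU thresholds from 0.50 to 0.95 in steps of 0.05. The function names are hypothetical, and this is not the evaluation code used for the table above.

```python
from typing import List, Optional, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def average_recall(
    gt_boxes: Sequence[Box],
    proposals: Sequence[Box],
    top_n: int,
    thresholds: Optional[List[float]] = None,
) -> float:
    """AR@top_n: recall averaged over IoU thresholds (assumed 0.50..0.95)."""
    if thresholds is None:
        thresholds = [0.5 + 0.05 * i for i in range(10)]
    if not gt_boxes:
        return 0.0
    kept = proposals[:top_n]  # proposals assumed sorted by descending score
    # Best overlap achieved for each ground-truth box.
    best = [max((iou(g, p) for p in kept), default=0.0) for g in gt_boxes]
    recalls = [sum(b >= t for b in best) / len(best) for t in thresholds]
    return sum(recalls) / len(recalls)


# Toy data: the third proposal overlaps the second car with IoU 0.62.
gt = [(0, 0, 100, 100), (200, 200, 300, 300)]
props = [(0, 0, 100, 100), (500, 500, 600, 600), (200, 200, 300, 262)]
print(average_recall(gt, props, top_n=1), average_recall(gt, props, top_n=3))
```

Averaging over thresholds is what lets a single number reflect both how many cars are found and how tightly the proposals fit them: a loosely fitting proposal only contributes at the lower thresholds.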

Car counting performance on PUCPR+ and CARPK

We compared our Object Counting CNN Model, which incorporates the proposed LPN, to other counting methods on the two datasets. For a fair comparison with the One-Look Regression method, the number of proposals used in Faster R-CNN and in our Object Counting CNN Model is 400 on PUCPR+ and 200 on CARPK. Two metrics, Mean Absolute Error (MAE) and Root Mean Squared Error (RMSE), are used to evaluate the counting methods. The results show that our counting approach is reliably effective, even in cross-scene estimation: it achieves the best MAE and RMSE on CARPK and the best RMSE on PUCPR+, where its MAE (22.76) is slightly behind that of One-Look Regression (21.88).

| Dataset | PUCPR+ | | CARPK | |
| --- | --- | --- | --- | --- |
| Method | MAE | RMSE | MAE | RMSE |
| YOLO [1] | 156.00 | 200.42 | 48.89 | 57.55 |
| Faster R-CNN [2] | 111.40 | 149.35 | 47.45 | 57.39 |
| One-Look Regression [3] | 21.88 | 36.73 | 59.46 | 66.84 |
| Our Object Counting CNN Model | 22.76 | 34.46 | 23.80 | 36.79 |
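For readers unfamiliar with the two metrics, the following sketch computes them from per-image counts using their standard definitions: MAE is the mean of the absolute count errors, and RMSE is the square root of the mean squared count error. The function names and the sample counts are made up for illustration; this is not the Counting Evaluation Tool shipped with the dataset.

```python
import math
from typing import Sequence


def _check(pred: Sequence[float], gt: Sequence[float]) -> None:
    if len(pred) != len(gt) or not gt:
        raise ValueError("pred and gt must be non-empty and of equal length")


def mae(pred: Sequence[float], gt: Sequence[float]) -> float:
    """Mean Absolute Error over per-image counts."""
    _check(pred, gt)
    return sum(abs(p - g) for p, g in zip(pred, gt)) / len(gt)


def rmse(pred: Sequence[float], gt: Sequence[float]) -> float:
    """Root Mean Squared Error over per-image counts."""
    _check(pred, gt)
    return math.sqrt(sum((p - g) ** 2 for p, g in zip(pred, gt)) / len(gt))


# Hypothetical predicted and ground-truth car counts for three images.
predicted = [48, 52, 61]
truth = [50, 50, 60]
print(mae(predicted, truth), rmse(predicted, truth))
```

RMSE penalizes large per-image errors more heavily than MAE, which is why a method can rank differently under the two metrics, as One-Look Regression and our model do on PUCPR+.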

Reference

1. Redmon, J., Divvala, S., Girshick, R., & Farhadi, A. (2016). You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (pp. 779-788).

2. Ren, S., He, K., Girshick, R., & Sun, J. (2015). Faster R-CNN: Towards real-time object detection with region proposal networks. In Advances in Neural Information Processing Systems (pp. 91-99).

3. Mundhenk, T. N., Konjevod, G., Sakla, W. A., & Boakye, K. (2016, October). A Large Contextual Dataset for Classification, Detection and Counting of Cars with Deep Learning. In European Conference on Computer Vision (pp. 785-800). Springer International Publishing.

Demo Video

The demo video for our Object Counting CNN Model over the parking lot of Taipei Zoo.

Below are example car counting and localization results on the PUCPR+ dataset (left) and the CARPK dataset (right).

Acknowledgement

This work was supported in part by the Ministry of Science and Technology, Taiwan, under Grants MOST 104-2622-8-002-002 and MOST 105-2218-E-002-032, and in part by MediaTek Inc., and grants from NVIDIA and the NVIDIA DGX-1 AI Supercomputer.